In the early hours of March 15, 2023, OpenAI unveiled its multimodal large language model, GPT-4, instantly igniting global discourse. Its predecessor, GPT-3.5, was the engine behind ChatGPT, which amassed 100 million users within two months of its launch. GPT-4 not only delivers comprehensive upgrades over GPT-3.5 but also introduces image-processing capabilities, sparking worldwide speculation about the future of work and reigniting debates on the evolving relationship between humans and technology.

Microsoft and Google were just as restless as users around the world: Microsoft swiftly embraced the new model, while Google launched an immediate counteroffensive. The AI storm triggered by GPT-4 has only just begun.

Leaving Its Predecessor “In the Dust”

Compared to its predecessor, GPT-4 supports multimodal inputs, significantly enhances natural language understanding and generation across multiple dimensions, and improves safety measures.

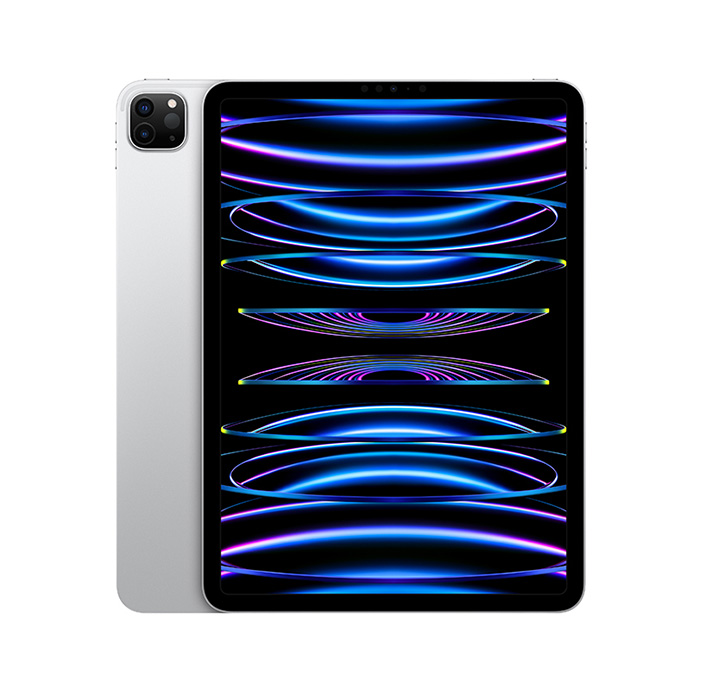

Unlike GPT-3.5, which focused solely on text, GPT-4 can process images and mixed-content documents—such as charts, screenshots, or photos with embedded text—and respond in natural language. For example, when shown a photo of a helium balloon tethered by a thin string and asked, “What happens if the string is cut?” GPT-4 replied, “The balloon will float away.” This demonstrates that the model doesn’t just recognize objects in an image but also understands their physical relationships.

In its core competency—natural language understanding and generation—GPT-4 shows marked improvements over GPT-3.5.

First, it better follows complex instructions. In one OpenAI demo, engineers tasked the model with summarizing a passage into a single sentence where every word had to start with the letter “G.” GPT-3.5 ignored the constraint entirely and produced a standard summary. GPT-4, however, generated a response that largely met the requirement—though it initially included the term “AI.” When the user clarified that “AI” doesn’t begin with “G,” GPT-4 immediately revised it to “global.”
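The constraint in that demo is easy to verify mechanically. The snippet below is a minimal sketch of such a check, not anything OpenAI used: the function name `words_violating_initial` and both draft sentences are hypothetical and written only for illustration.

```python
import re


def words_violating_initial(sentence: str, letter: str = "g") -> list[str]:
    """Return the words in `sentence` that do not start with `letter`."""
    # Treat runs of letters, digits, apostrophes, and hyphens as words,
    # so that "GPT-4" counts as a single word.
    words = re.findall(r"[A-Za-z0-9'-]+", sentence)
    return [w for w in words if not w.lower().startswith(letter.lower())]


# Hypothetical drafts for illustration; these are NOT the actual demo output.
first_draft = "GPT-4 gives groundbreaking gains, guiding AI growth."
revised = first_draft.replace("AI", "global")

print(words_violating_initial(first_draft))  # ['AI']
print(words_violating_initial(revised))      # []
```

A check like this flags exactly the kind of slip described above: a single word, “AI,” that breaks an otherwise satisfied constraint.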

Second, GPT-4 handles nuanced, complex scenarios far more effectively. In a simulated bar exam, GPT-4 scored in the top 10% of test-takers, whereas GPT-3.5 ranked in the bottom 10%—highlighting GPT-4’s near-human performance in professional domains.

Moreover, GPT-4 can read, analyze, or generate texts up to 25,000 words—far surpassing ChatGPT’s 3,000-word limit—enabling applications such as long-form content creation, extended conversations, and document search and analysis.
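To put the two limits in perspective, the arithmetic can be sketched as follows. This is a rough illustration only: it uses the approximate word counts quoted above (the underlying limits are expressed in tokens rather than words), and the function name `passes_needed` and the 20,000-word document are hypothetical.

```python
# Approximate word limits quoted in the article (assumed here for illustration).
CHATGPT_WORD_LIMIT = 3_000
GPT4_WORD_LIMIT = 25_000


def passes_needed(document_words: int, word_limit: int) -> int:
    """Number of separate chunks needed to cover a document of the given length."""
    if document_words < 0 or word_limit <= 0:
        raise ValueError("document_words must be >= 0 and word_limit must be > 0")
    # Ceiling division using integers only.
    return (document_words + word_limit - 1) // word_limit


doc_words = 20_000  # hypothetical report length
print(passes_needed(doc_words, CHATGPT_WORD_LIMIT))  # 7
print(passes_needed(doc_words, GPT4_WORD_LIMIT))     # 1
```

Under these assumptions, a document that would have to be split into seven pieces at the smaller limit fits in a single pass at the larger one, which is what makes long-document analysis practical.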

On the critical front of safety, OpenAI reports that, in its internal evaluations, GPT-4 is 82% less likely than GPT-3.5 to respond to requests for prohibited content and 40% more likely to produce factually accurate responses.

Deeper Integration with Microsoft’s Search and Cloud Services

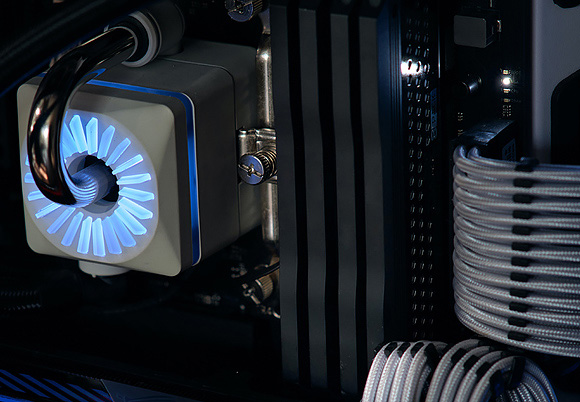

Immediately after GPT-4’s release, Microsoft “claimed” the model, announcing that its Bing search engine was already running on GPT-4. OpenAI also revealed that GPT-4 was trained on Microsoft Azure’s AI supercomputer and will be delivered globally via Azure’s AI infrastructure—a system co-designed by OpenAI and Azure specifically for OpenAI’s deep learning architecture.

Microsoft’s partnership with OpenAI began in 2019. In January 2023, the companies announced the third phase of their collaboration, focusing on massive-scale supercomputing, novel AI experiences, and Azure as OpenAI’s exclusive cloud provider. Supporting OpenAI’s research with this supercomputer is a key pillar of their joint roadmap.

Microsoft’s strategic direction is clear from its commitments: it plans to deploy OpenAI models across both consumer and enterprise products and introduce new digital experiences powered by OpenAI technology. Through Azure OpenAI Service, developers gain direct access to OpenAI’s models, backed by Azure’s trusted, enterprise-grade capabilities and AI-optimized infrastructure and tooling, so that they can build cutting-edge AI applications. As OpenAI’s exclusive cloud partner, Azure will support all OpenAI workloads across research, products, and API services.

Google Strikes Back

Not to be outdone, Google responded swiftly to GPT-4’s launch by opening access to the API of its large language model, PaLM, and unveiling MakerSuite—a new toolkit for developers. The PaLM API serves as the gateway to Google’s family of large models, enabling developers to integrate specialized versions optimized for content generation and chat, as well as general-purpose models fine-tuned for summarization, classification, and other tasks. MakerSuite accelerates prototyping, allowing developers to build and test applications rapidly.

On the same day, Google published a blog post announcing it would provide generative AI capabilities—including text, image, code, audio, and video generation—to developers, industries, and governments.

This isn’t Google’s first countermove against GPT. In February, it unveiled Bard, its ChatGPT rival, powered by LaMDA, a large language model first announced in 2021. Like GPT, LaMDA is built on the Transformer architecture, but it is trained specifically on dialogue, including open-ended conversations that cannot be settled with a “yes” or “no.” It aims to generate precise, context-aware responses by discerning subtle differences across questions and replies.

Google maintains a robust portfolio of foundation models, including BERT, MUM, PaLM, Imagen, and MusicLM. Notably, the Transformer architecture underpinning most large language models—including GPT—was originally introduced by Google in 2017. BERT, Google’s first Transformer-based large model, kicked off the global arms race among tech giants in foundation model development. PaLM, released in 2022, boasts 540 billion parameters, reflecting Google’s deep and sustained investment in this field.

Much like Microsoft, Google is pursuing a dual-track strategy: consumer-facing applications (led by Search) and enterprise solutions via Google Cloud. Since BERT, Google has integrated large models into Search to help users transform raw information into actionable knowledge more efficiently. Today, Google also announced it will deploy Vertex AI and other development platforms on Google Cloud, empowering developers to build enterprise-grade generative AI applications that meet stringent security and privacy standards.

Persistent Limitations

Despite its significant advances, GPT-4 still faces notable limitations.

First, reliability remains a concern. When confronted with unfamiliar topics, GPT models may fabricate plausible-sounding but false information—a phenomenon known as AI “hallucination.”

Because most of its pretraining data ends in September 2021, GPT-4 generally lacks knowledge of later events, and it does not learn from its own experience. It occasionally makes basic reasoning errors or accepts incorrect user statements too readily.

Additionally, GPT-4 can be confidently wrong in its predictions and sometimes fails to double-check its work when it is likely to make a mistake. Output biases also persist; although OpenAI states it has taken steps to mitigate them, full resolution will take time.

But perhaps the most pressing concern for industry and the public alike is the ethical and safety implications of GPT-4 and other large models. OpenAI acknowledges that GPT-4 and future systems could impact society in both beneficial and harmful ways. The team is collaborating with external researchers to improve its methods for understanding and evaluating these potential impacts and is developing mechanisms to assess dangerous capabilities that might emerge in future systems. In the near term, OpenAI plans to publish recommendations on how society can prepare for AI’s societal effects and forecasts of its economic implications.